Here we provide background and suggestions to help dciWebMapper users prepare and design usability and utility tests for web maps built with our dciWebMapper framework.

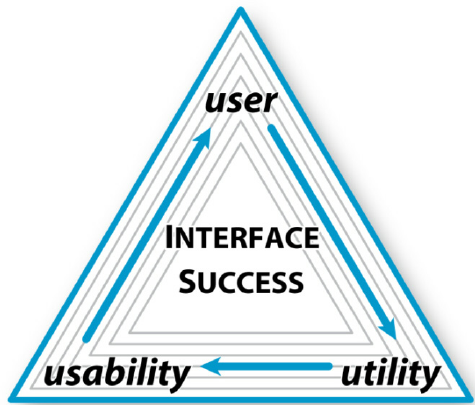

Utility describes what an interface can do and how useful its functionalities are for helping users complete their objectives. Usability describes how easy and pleasant those functionalities are to use. A successful visualization tool typically has both high usability and high utility (Grinstein et al., 2003; Janicki et al., 2016). For a web map application (app), it is essential to consider users’ needs and expectations, because multi-dimensional spatial information can pose challenges for untrained users (Zuo et al., 2019). Roth et al. (2015) emphasized that the “user” should be given first attention, before utility and usability, and further proposed the Three U’s of Interface Success (see Figure 1), which emphasize considering all three elements within user→utility→usability loops. Feedback should also be gathered “in a loop” so that the web app is reviewed at every stage (Roth & Harrower, 2008). Our GeoAIR Lab members assessed the web apps developed for the case studies iteratively (i.e., repeatedly and often) throughout the design and development process to ensure their usability. Even with this feedback loop in place, it is still important to conduct utility and usability tests once the web app is complete. Roth et al. (2017) emphasized the importance of comprehensive user-centered case studies, which provide consistent and detailed descriptions of how a given interactive map was designed and evaluated. Such case studies are also an important way to produce transferable, contextual insights into interaction design and use. Section 2 below provides an exemplary plan for evaluating the case-study web maps, which dciWebMapper framework users can reference when designing usability and utility testing for their own web maps.

Figure 1. The Three U’s of Interface Success (Roth et al., 2015).

Usability testing does not require an enormous sample size; it often requires three to ten participants (Roth & Harrower, 2008). Research indicates that five users are enough to uncover 80% of usability problems (Virzi, 1992; Nielsen & Landauer, 1993), although other researchers suggest different numbers. For a comparative design such as the one outlined here, an experiment could recruit 20 participants across a university (e.g., students, faculty, and/or staff could be included in the participant pool). Participants would vary in age, background, computer skills, and/or previous experience in using and/or developing web maps. They would be randomly assigned to either the web map app group (first group, 10 participants) or the no web map app group (second group, 10 participants). Random assignment helps ensure that any performance differences are not due to pre-existing differences between the two groups. The first group would take part in experimental usability testing of the web map apps built with our dciWebMapper framework. The second group serves mainly for comparative usability testing: its participants could use the traditional method (the dataset behind the web map apps, without the web map app interface) to complete the same tasks. For iTRELISmap, the second group could be asked to use the TRELIS website to search for scholars and complete the same tasks as the first group. The experiment will include quantitative and qualitative questionnaires.

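Randomly assigning the 20 participants to two groups of 10 can be scripted so that the allocation is documented and reproducible. The sketch below is a minimal example using Python's standard `random` module; the helper name `assign_groups`, the participant IDs (`P01` to `P20`), and the seed value are hypothetical placeholders, not part of the dciWebMapper framework.

```python
import random


def assign_groups(participants, seed=None):
    """Randomly split participants into two equal-sized groups.

    Hypothetical helper for illustration. Returns a tuple
    (web_map_group, comparison_group).
    """
    if len(participants) % 2 != 0:
        raise ValueError("expected an even number of participants")
    rng = random.Random(seed)  # seeded generator so the split can be reproduced
    shuffled = list(participants)  # copy so the caller's list is not modified
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


if __name__ == "__main__":
    ids = [f"P{i:02d}" for i in range(1, 21)]  # 20 hypothetical participant IDs
    web_map_group, comparison_group = assign_groups(ids, seed=2024)
    print("Web map app group:   ", web_map_group)
    print("No web map app group:", comparison_group)
```

Fixing the seed lets the same allocation be re-created when reporting the study. With only 20 participants, simple randomization can still leave the groups unbalanced on attributes such as prior web-mapping experience; stratifying participants by such an attribute and shuffling within each stratum is a common refinement.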

We designed a draft of the quantitative and qualitative questionnaires using the iTRELISmap web application as an example. For the qualitative questionnaires, we suggest the following tasks (note that the difficulty level of the tasks varies):

We suggest following each of the five tasks with confidence and preference ratings:

The following Likert-scale questions (Likert, 1987) consist of the ten statements of the standard System Usability Scale (SUS) questionnaire (Brooke, 1996):